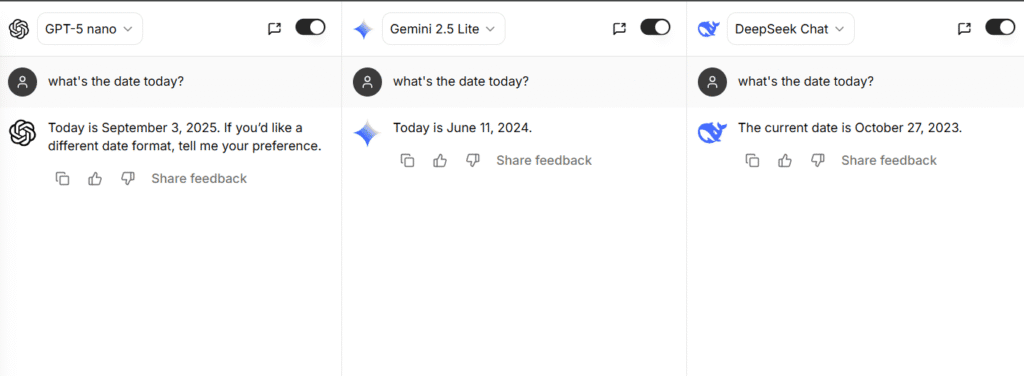

When you ask an AI chatbot something as simple as “What’s the date today?”, you’d expect all of them to say the same thing. After all, the date isn’t a matter of opinion; it’s a fact. But as the screenshot shows, that’s not what happens.

- ChatGPT (GPT-5 nano) confidently said the date was September 3, 2025.

- Gemini 2.5 Lite thought it was June 11, 2024.

- DeepSeek Chat went even further back and claimed the date was October 27, 2023.

At first glance, this might look confusing. How can three advanced AI tools give three completely different answers to the same basic question? To understand why, we need to look at how AI handles time, what “knowledge cutoff” means, and why some models get stuck in the past.

AI Doesn’t Experience Time Like Humans

Unlike us, AI doesn’t wake up every morning and know it’s a new day. These models don’t have a sense of time passing. Instead, they rely on:

- Training data – This is the information fed into the model before it was released. If an AI was last trained in 2023, its “world” effectively stops there unless connected to updates.

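- System integration – Some platforms read the current date from the server or device clock and pass it to the model, typically by adding it to the hidden instructions (the system prompt) that accompany each conversation. That’s how recent versions of ChatGPT can usually tell the correct date.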

If the model doesn’t receive the date this way, it has to guess, and that guess is usually anchored to the last period its training data covers: its knowledge cutoff.
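To make the difference concrete, here is a minimal sketch of the two situations, assuming a chat service that builds a system prompt from its server clock before each conversation. The function names, the prompt wording, and the `TRAINING_CUTOFF` value are all hypothetical illustrations, not any vendor’s actual implementation, and the "model" here is just a crude stand-in that reads an injected date if one is present.

```python
from datetime import date
from typing import Optional

# Hypothetical knowledge cutoff for a model with no clock access.
TRAINING_CUTOFF = date(2023, 10, 1)


def build_system_prompt(today: Optional[date]) -> str:
    """Build the hidden instructions a chat service might prepend to a conversation.

    If the platform supplies today's date (read from its server clock), it is
    written into the prompt. Otherwise the prompt carries no date at all.
    """
    if today is not None:
        return f"You are a helpful assistant. Current date: {today.isoformat()}."
    return "You are a helpful assistant."


def naive_date_guess(system_prompt: str) -> date:
    """Crude stand-in for the model: use an injected date if present, else fall back to the cutoff."""
    marker = "Current date: "
    if marker in system_prompt:
        start = system_prompt.index(marker) + len(marker)
        # An ISO date (YYYY-MM-DD) is exactly 10 characters long.
        return date.fromisoformat(system_prompt[start:start + 10])
    return TRAINING_CUTOFF


if __name__ == "__main__":
    with_clock = build_system_prompt(date(2025, 9, 3))
    without_clock = build_system_prompt(None)
    print(naive_date_guess(with_clock))     # 2025-09-03
    print(naive_date_guess(without_clock))  # 2023-10-01
```

The point of the sketch is that the model itself never "checks a clock": the platform either hands it the date or it doesn't, and in the second case the best it can do is lean on the period its training data describes.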

That explains the screenshot:

- ChatGPT pulled the actual system date.

- Gemini Lite didn’t have live access, so it gave a 2024 answer.

- DeepSeek’s version stayed locked in 2023, when its data was last updated.

What Is a Knowledge Cutoff?

You’ll often hear that an AI has a “knowledge cutoff.” This is the latest point in time covered by the data the model was trained on.

For example:

- DeepSeek Chat probably has a cutoff around late 2023. That’s why it still thinks the current year is 2023.

- Gemini 2.5 Lite seems to have a cutoff mid-2024. Without real-time updates, it assumes that’s still the present.

- ChatGPT GPT-5 nano not only has a newer cutoff but is also linked to a system clock. That’s why it knew it was September 3, 2025.

Think of a knowledge cutoff like a student who stopped reading newspapers at a certain time. If they stopped in 2023, they’ll know a lot up until then, but ask them what happened yesterday, and they won’t have a clue.

Why Different AI Models Answer Differently

Several factors influence how each AI responds to a question about the date.

1. Training and Updates

Some companies update their models frequently, while others don’t. OpenAI (behind ChatGPT) pushes out regular updates. Gemini and DeepSeek may lag, especially in their lighter or free versions.

2. Lite vs Full Versions

The screenshot used Gemini 2.5 Lite, a slimmed-down version of Google’s AI. Lite versions are designed to be faster and cheaper but often lack features like real-time date checking. The full Gemini Pro model may answer correctly.

3. System Integration

ChatGPT runs on systems that can pull real-time data like the date, time, and in some cases, even web results (if enabled). DeepSeek and Gemini Lite don’t always have that connection turned on.

4. Privacy and Security Choices

Some AI tools intentionally avoid live system access to reduce privacy risks. By not checking your clock or location, they keep interactions simpler and safer. The downside is outdated answers.

What This Tells Us About AI

When three popular AI systems can’t agree on something as simple as the date, it’s a good reminder of a bigger truth:

AI chatbots are not humans. They don’t know the world in real time. They’re pattern recognizers trained on past information. Unless connected to live data, they live in a kind of time bubble defined by their training.

That doesn’t make them useless. It just means we should understand their limits. If you ask about history, science, or coding, they can be amazing. If you ask about today’s news, the weather, or, as we’ve seen, the date, you may get an outdated answer.

A Simple Analogy

Imagine three friends with different calendars:

- ChatGPT carries a smartphone that updates every day.

- Gemini Lite has a planner from 2024 and forgot to buy a new one.

- DeepSeek is still flipping through a 2023 wall calendar.

All three are smart, but only one keeps track of today’s date correctly.

Why It Matters for Users

For casual users, this date mismatch might seem funny or harmless. But for students, professionals, and researchers, it shows why you should always double-check facts from AI.

If you ask an AI for a well-established scientific formula, the answer will likely be correct. But if you ask about the stock market today, a flight schedule, or today’s date, you should verify with another source.

This also highlights why companies are racing to improve real-time access. Google, OpenAI, and DeepSeek all know that users want AI that understands the present, not just the past.

The Future of Real-Time AI

In the near future, most AI tools will likely be fully connected to live data streams. That means:

- Always knowing the correct date and time.

- Checking current events, weather, or stock prices instantly.

- Updating knowledge continuously instead of being stuck at a cutoff.

But this also raises big questions about privacy. If AI always has real-time access, how much data will it pull from your device? Companies will need to balance usefulness with safety.

Final Thoughts

The screenshot comparison of ChatGPT, Gemini, and DeepSeek is more than just a funny mistake. It reveals the core difference in how AI systems work.

- ChatGPT had real-time access and gave the correct date.

- Gemini Lite relied on older data and thought it was 2024.

- DeepSeek lagged further behind in 2023.

The lesson here is simple: AI doesn’t experience time the way humans do. Some models are given the date from a real clock; others live inside their last training update.

So the next time you ask an AI, “What’s the date today?”, don’t be surprised if one says it’s 2025, another swears it’s 2024, and the third insists it’s still 2023.

It’s not that they’re broken; it’s just that they’re living in different calendars.